Redo Transport Service:

§ Automates the transfer of redo to one or more destinations

§ Resolve gaps in Redo Transport in case of network failures

FIG 5-1: Oracle Dataguard (B14239-04)

Destinations types for Redo Transport Service:

§ Oracle Data Guard Standby Database

§ Archived redo log repository

§ Oracle Streams real-time downstream capture database

§ Oracle Change Data Capture staging database

LOG_ARCHIVE_DEST_n parameter:

§ Number of destinations maximum (10)

§ Number of Standby Database configured max (10)

Attributes:

§ Location= specifies the local destinations

§ Service= specifies remote destinations

LOG_ARCHIVE_DEST_n is used along with LOG_ARCHIVE_DEST_STATE_n parameter.

Attributes: of LOG_ARCHIVE_DEST_STATE_n parameter

| ENABLE: | Redo transport services can transmit redo data to this destination. This is the default. |

| DEFER: | This is a valid but unused destination (Redo transport services will not transmit redo data to this destination.) |

| ALTERNATE: | This destination is not enabled, but it will become enabled if communication to its associated destination fails. |

| RESET: | Functions the same as DEFER |

Example 5–1 Specifying a Local Archiving Destination

LOG_ARCHIVE_DEST_1=’LOCATION=/arch1/chicago/’

LOG_ARCHIVE_DEST_STATE_1=ENABLE

Example 5–2 Specifying a Remote Archiving Destination

LOG_ARCHIVE_DEST_1=’LOCATION=/arch1/chicago/’

LOG_ARCHIVE_DEST_STATE_1=ENABLE

LOG_ARCHIVE_DEST_2=’SERVICE=boston’

LOG_ARCHIVE_DEST_STATE_2=ENABLE

We can change the destination attributes

SQL> ALTER SYSTEM SET LOG_ARCHIVE_DEST_2=’SERVICE=boston

VALID_FOR= (ONLINE_LOGFILES,PRIMARY_ROLE)’;

SQL> ALTER SYSTEM SET LOG_ARCHIVE_DEST_STATE_2=DEFER;

(This commands defers the Redo Transport Service)

§ The modifications take effect after the next log switch on the primary database.

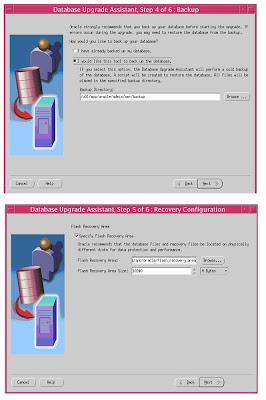

§ Parameter for configuring Flash Recovery Area is DB_RECOVERY_FILE_DEST = ‘/……’

§ If no destinations for local archiving are specified then LOG_ARCHIVE_DEST_10 is implicitly mapped to DB_RECOVERY_FILE_DEST location by Oracle Data Guard.

§ A Primary Database cannot write the redo data to the Flash Recovery Area of Logical Standby Database

Note: Flash Recovery Area is the directory to stores the files related to recovery.

§ To configure Flash Recovery Area to any other destinations other then LOG_ARCHIVE_DEST_10 use

§ LOG_ARCHIVE_DEST_9=’LOATION=USE_ DB_RECOVERY_FILE_DEST ARCH MANDATORY REOPEN 5

§ Specifying Flash Recovery Area as Physical Standby Database

STANDBY_ARCHIVE_DEST = ‘LOCATION=USE_ DB_RECOVERY_FILE_DEST’

§ Sharing a Flash Recovery Area between Physical Standby Database and Primary Database DB_UNIQUE_NAME should be specified to each database and it should have a unique name.

Example 5–3 Primary Database Initialization Parameters for a Shared Recovery Area

DB_NAME=PAYROLL

LOG_ARCHIVE_DEST_1=’LOCATION=USE_DB_RECOVERY_FILE_DEST’

DB_RECOVERY_FILE_DEST=’/arch/oradata’

DB_RECOVERY_FILE_DEST_SIZE=20G

Example 5–4 Standby Database Initialization Parameters for a Shared Recovery Area

DB_NAME=PAYROLL

DB_UNIQUE_NAME=boston

LOG_ARCHIVE_DEST_1=’LOCATION=USE_DB_RECOVERY_FILE_DEST’

STANDBY_ARCHIVE_DEST=’LOCATION=USE_DB_RECOVERY_FILE_DEST’

DB_RECOVERY_FILE_DEST=’/arch/oradata’

DB_RECOVERY_FILE_DEST_SIZE=5G

Sending Redo:

Redo can be transmitted by archiver process (Arcn) and log writer process (lgwr). But both cannot be used for the same destinations i.e... arcn can send redo to one destinations and lgwr to other.

Using ARCn to send redo

§ Default method & 4 processes are used by default

§ Supports only Maximum Performance level of data protection

§ Specify LOCATION attribute for local archiving and SERVICE attribute for remote archiving.

EX:

LOG_ARCHIVE_DEST_1=’LOCATION=/arch1/chicago/’

LOG_ARCHIVE_DEST_2=’SERVICE=boston’

Another parameter

LOG_ARCHIVE_MAX_PROCESSES (Dynamic parameter; Maximum is 30 process)

Archival Processing:

FIG 5-3: Oracle Dataguard (B14239-04)

Note: use v$archive_log to verify the redo data is received on Standby Database

Minimum 2 Arch Process are required default is 4 & maximum is 30

RFS: On the remote destination, the remote file server process (RFS) will, in turn, write the redo data to an archived redo log file from a standby redo log file. Log apply services use Redo Apply (MRP process1) or SQL Apply (LSP process2) to apply the redo to the standby database.

MRP: The managed recovery process applies archived redo log files to the physical standby database, and automatically determines the optimal number of parallel recovery processes at the time it starts. The number of parallel recovery slaves spawned is based on the number of CPUs available on the standby server.

LSP: The logical standby process uses parallel execution (Pnnn) processes to apply archived redo log files to the logical standby database, using SQL interfaces.

Using LGRW to Send Redo

- LGWR SYNC

- LGWR ASYNC

LGWR SYNC archival processing:

Parameter: LOG_ARCHIVE_DEST_n

Attributes: LGWR, SYNC,SERVICE

Example 5–5 Initialization Parameters for LGWR Synchronous Archival

LOG_ARCHIVE_DEST_1='LOCATION=/arch1/chicago'

LOG_ARCHIVE_DEST_2='SERVICE=boston LGWR SYNC NET_TIMEOUT=30'

LOG_ARCHIVE_DEST_STATE_1=ENABLE

LOG_ARCHIVE_DEST_STATE_2=ENABLE

SYNC: Network I/O is synchronous (default)

Waits until each write operation is completed

Note: if LGWR process does not work for some reason then redo transport will automatically shift to ARCn process.

NET_TIMEOUT: waits for specified seconds over the network & give error if write operation does not complete

LGWR ASYNC archival processing:

Ex: Same as above without SYNC & NET_TIMEOUT attribute

Use ASYNC instead of SYNC

NET_TIMEOUT is not necessary in ora10.2

Diagram showing SYNC & ASYNC LGWR archival process:

FIG 5-4: Oracle Dataguard (B14239-04)

FIG 5-5: Oracle Dataguard (B14239-04)

Note: LOG_ARCHIVE_DEST & LOG_ARCHIVE_DUPLEX_DEST should not be used for configuring Flash Recovery Area.

Providing security while transmitting redo:

Sql> Orapwd file=orapw password=xyz entries=10

Note: Make sys user passwore identical for all db’s in Oracle Data Guard. Also set remote_login_password_file=exclusive/shared.

VALID_FOR attribute of LOG_ARCHIVE_DEST_n parameter

VALID_FOR=(redo_log_type,database_role)

redo_log_type: ONLINE_LOGFILE, STANDBY_LOGFILE, or ALL_LOGFILES

database_role: PRIMARY_ROLE, STANDBY_ROLE, or ALL_ROLES.

VALID_FOR attribute is required for role transtition

- configures destination attrivutes for both Primary Database and Standby Database in one SPFILE

- If VaLID_FOR is not used then we need to user two spfiles each time we do the role transitions

- This attribute makes the switch-over and Fail-over easy.

Ex

LOG_ARCHIVE_DEST_1='LOCATION=/ARCH1/CHICAGO/ VALID_FOR=(ALL_LOGFILES,ALL_ROLES)'

DB_UNIQUE_NAME: Specified unique database name in Oracle Data Guard conf.

Used along with LOG_ARCHIVE_CONFIG.

Ex:

DB_NAME=chicago

DB_UNIQUE_NAME=chicago

LOG_ARCHIVE_CONFIG='DG_CONFIG= (chicago, boston)'

LOG_ARCHIVE_DEST_1='LOCATION=/arch1/chicago/ VALID_FOR= (ALL_LOGFILES, ALL_ROLES)

LOG_ARCHIVE_DEST_2= 'SERVICE=boston LGWR ASYNCVALID_FOR= (ONLINE_LOGFILES, PRIMARY_ROLE)

DB_UNIQUE_NAME=boston'

The LOG_ARCHIVE_CONFIG parameter also has SEND, NOSEND, RECEIVE, and NORECEIVE attributes:

- SEND enables a database to send redo data to remote destinations

- RECEIVE enables the standby database to receive redo from another database

To disable these settings, use the NOSEND and NORECEIVE keywords

Ex: LOG_ARCHIVE_CONFIG='NORECEIVE, DG_CONFIG= (chicago,boston)'

Use of these parameters can effect the role transition. Therefore trys to remove them before doing any role transitions

Handling Errors while transmitting redo:

Options when archiving fails

Retry the archival operations (control the number of retry operations)

Use an Alternate destinations

Ex: LOG_ARCHIVE_DEST_1='LOCATION=/arc_dest REOPEN=60 MAX_FAILURE=3's

Other parameters used:

REOPEN: default value is 300 seconds, 0 turns off this option

MAXIMUM _FAILURE: Maximum number of failures

ALTERNATE: Alternate Destinations

Note: Alternate take precedence over mandatory attribute; i.e.. even if the archiving destinations is mandatory and if it fails; the archiving automatically moves to alternate destinations.

DATA PROTECTION MODES:

| MAXIMUX PROTECTION | MAXIMUM AVAILIBLITY | MAXIMUX PERFOMANCE (Default) |

| - No Data loss if Primary Database fails -Redo data needed for recovery has to be written both to an online redo log files and standby redo log files before commit - Atleast one Standby Database should be available - If any fault happens Primary Database will shutdown - Configure LGWR, SYNC & AFFIRM attributes of LOG_ARCHIVE_DEST_n parameter on Standby Database | -Provides highest level of data protection without compromising availability of Primary Database -Primary Database doesn’t shutdown and continues to work in maximum performance mode until the fault is corrected -all gaps in redolog files are resolved and then it goes back to maximum availability mode. -Alteast on Standby Database should be available - Configure LGWR, SYNC & AFFIRM attributes of LOG_ARCHIVE_DEST_n parameter on Standby Database | -Provides highest level of data protection -does not effect the performance - As soon as the redo data is writted to the online redo log file the transacation is committed. -redo is also written to alteast one Standby Database asynchronously - Use network links with sufficient bandwith to get maximum availablitiy with minimal input on performance on pdb - Set LGWR, SYNC & AFFIRM attributes of LOG_ARCHIVE_DEST_n parameter on alteast Standby Database |

Setting the Data Protection Mode of a Data Guard Configuration

Atleast one db should meet the following minimum requirements:

|

| MAXIMUM PROTECTION | MAXIMUM AVAILIBLITY | MAXIMUM PERFOMANCE |

| Redo Archival Process | LGWR | LGWR | LGWR OR ARCH |

| Network transmission mode | SYNC | SYNC | SYNC or ASYNC when using LGWR process. SYNC if using ARCH process |

| Disk write option | AFFIRM | AFFIRM | AFFIRM OR NO AFFIRM |

| Standby redo log required? | YES | YES | NO BUT RECOMMENDED |

Note: oracle recommends that on Oracle Data Guard configurations that is running on maximum protection mode contains atleast two Standby Database meeting the above requirements so that the Primary Database continue processing without shutting down if one of the Standby Database cannot receive redo data from Primary Database.

Managing log files:

1. Specify alternate directory for archived redologs.

- Redo received from Primary Database is identified by location attribute of the parameter LOG_ARCHIVE_DEST_n.

- Alternate directory can be specified by using parameter STANDBY_ARCHIVE_DEST.

- If both parameters are specified then STANDBY_ARCHIVE_DEST overrides LOG_ARCHIVE_DEST_n parameter.

- query v$arvhive_dest to check the value of STANDBY_ARCHIVE_DEST parameter

SQL> SELECT DEST_NAME, DESTINATION FROM V$ARCHIVE_DEST WHERE DEST_NAME='STANDBY_ARCHIVE_DEST';

- Filesnames are generated in the format specified LOG_ARCHIVE_FORMAT=log%t_%s_%r.arc

Note: Redo Transport Service stores the fully qualified domain name of these files in Standby Database control file and redo apply uses this information to perform recovery.

- view v$archived_log

- checking archived redo’s log files that are on the standby system

SQL> SELECT NAME FROM V$ARCHIVED_LOG;

2. Reusing Online Redo Log Files

1. Specify alternate directory for archived redolog files

- redo received from Primary Database is identified by location attribute of the parameter LOG_ARCHIVE_DEST_n

- Alternate directory can be specified by using parameter STANDBY_ARCHIVE_DEST

- If both parameters are specified than STANDBY_ARCHIVE_DEST overrides LOG_ARCHIVE_DEST_n parameter

- Query v$arhive_dest to check the value of STANDBY_ARCHIVE_DEST parameter

SQL> SELECT DEST_NAME, DESTINATION FROM V$ARCHIVE_DEST WHERE DEST_NAME= 'STANDBY_ARCHIVE_DEST';

- Files name are generated in the format specified by LOG_ARCHIVE_FORMAT=log%t_%s_%r.arc

Note: Redo Transport Service stores fully qualified domain name of these files in Standby Database control file & redo apply uses this information to perform recovery.

2. Reusing Online Redolog file:

For reusing the online redolog files we have to set optional or mandatory option with LOG_ARCHIVE_DEST_n parameter

Ex: LOG_ARCHIVE_DEST_3=’LOCATION=/arch_dest MANDATORY’

Note: By Default remote destinations are set to optional.

By Default one local destination is mandatory.

§ If mandatory is specified the archive log files are not overwritten until the failed archive log is applied

§ If optional is specified; even if the redo is not applied the files are over written.

3. Managing Standy Redo log files:

Check the RFS process trace file or database alert log file to determine we have adequate standby redo log files or not.

i.e... If these files indicate RFS process has to wait frequently for a group as archiving is not getting completed than add more log file groups to standby redo log.

Note: when ever an ORL file group is added to Primary Database than we must add the corresponding standby redo log file group to the Standby Database.

If the no. of standby redo log file groups are inadequate then Primary Database will shutdown if it is in maximum protection mode or switch to maximum performance mode if it is in maximum availability mode

Ex: Adding a member to the standby redo log group

Sql> Alter database add standby logfile member ‘/disk1/oracle/dbs/log2b.rdo’ to group 2;

4. Planning for growth & reuse of control files:

§ The maximum control file size is 20,000 database blocks

§ If 8k is the block size (8124) then the maximum control file size will be 156 MB.

§ As long as the archived redo logs are generated or RMAN backups are taken records are added to the control file. If control file reaches its maximum size then these records are reused.

§ Parameter used to specify the time for keeping control file records is control_file_record_keep_time value ranges from 0-365 days (default is 7 days)

§ Note: Keep the control_file_record_keep_time value atleast upto last 2 full backup’s period.

§ In case if redo is planned to apply with delay then set this value to more no. of days.

5. Sharing a log file destinations among multiple Standby Databases:

Ex:

LOG_ARCHIVE_DEST_1=‘LOCATION=disk1 MANDATORY’

LOG_ARCHIVE_DEST_2 =‘SERCIVE=standby1 optional’

LOG_ARCHIVE_DEST_3 =‘SERCIVE=standby2 optional dependency LOG_ARCHIVE_DEST_2’

In this case DEPENDENCY attribute is set to second standby db which takes the redo data from LOG_ARCHIVE_DEST_2.

This kind of setup can be used if

§ Primary Database & Standby Database resides on the same system.

§ Physical Standby Database & Logical Standby Database resides on the same system.

§ When clustered file system is used.

§ When network file system is used

MANAGING ARCHIVE GAPS:

§ Oracle Data Guard resolves the gaps automatically

§ Gaps can happen due to network failure or archiving problem on Primary Database.

§ Primary Database polls Standby Database every minute to detect the gaps [polling mechanism]

§ In case Primary Database is not available then we have to resolve the gaps manually by applying redo from one of the Standby Database.

§ No extra configurations are required to resolve the gaps automatically.

1. Using FAL [fetch archive logs mechanism] to resolve gaps.

Set the parameters FAL_SERVER = net_service_name

FAL_SERVER=standby2_db, standby3_db

FAL_CLIENT=stadnby1_db

2. Manually resolving archive gaps

We have to resolve the gaps manually if Primary Database is not available and if we are using Logical Standby Database (case 1); Also application for some other cases.

Resolving gaps on a Physical Standby Database:

- Query the gap on Physical Standby Database:

SQL> SELECT * FROM V$ARCHIVE_GAP;

THREAD# LOW_SEQUENCE# HIGH_SEQUENCE#

----------- ------------------------ --------------------------

1 7 10

- Find the missing logs on Primary Database and copy them to the Physical Standby Database.

SQL> SELECT NAME FROM V$ARCHIVED_LOG WHERE THREAD#=1 AND DEST_ID=1 AND SEQUENCE# BETWEEN 7 AND 10;

NAME

-------------------------------------------------------------------------

/primary/thread1_dest/arcr_1_7.arc

/primary/thread1_dest/arcr_1_8.arc

/primary/thread1_dest/arcr_1_9.arc

- Once these log files are copied on Physical Standby Database then we have to register them with Physical Standby Database

SQL> ALTER DATABASE REGISTER LOGFILE

'/physical_standby1/thread1_dest/arcr_1_7.arc';

SQL> ALTER DATABASE REGISTER LOGFILE

'/physical_standby1/thread1_dest/arcr_1_8.arc';

- Restart Redo Apply.

Resolving gaps on a Logical Standby Database:

Same procedure as Physical Standby Database but the view used is dba_logstdby_log instead of v$archive_gap

Steps:

a.

SQL> COLUMN FILE_NAME FORMAT a55

SQL> SELECT THREAD#, SEQUENCE#, FILE_NAME FROM DBA_LOGSTDBY_LOG L

WHERE NEXT_CHANGE# NOT IN

(SELECT FIRST_CHANGE# FROM DBA_LOGSTDBY_LOG WHERE L.THREAD# = THREAD#) ORDER BY THREAD#,SEQUENCE#;

THREAD# SEQUENCE# FILE_NAME

---------- ---------- -----------------------------------------------

1 6 /disk1/oracle/dbs/log-1292880008_6.arc

1 10 /disk1/oracle/dbs/log-1292880008_10.arc

Note: If there is a gap then only one file is hsown for each thread. Otherwise it shows two files for each thread

In the above examples missing files are 7,8,9.

b. copy these file on Logical Standby Database location.

c. register these files with Logical Standby Database

SQL> ALTER DATABASE REGISTER LOGICAL LOGFILE 'file_name';

d. Restart SQL Apply

Verification:

1. Check the status of online redofile on Primary Database

SQL> SELECT THREAD#, SEQUENCE#, ARCHIVED, STATUS FROM V$LOG;

2. determine is the most recent archive file on Primary Database

SQL> SELECT MAX(SEQUENCE#), THREAD# FROM V$ARCHIVED_LOG GROUP BY THREAD#;

3. Use the following query on Primary Database to check which is the most recently transmitted archive log file to each destination

SQL> SELECT DESTINATION, STATUS, ARCHIVED_THREAD#, ARCHIVED_SEQ#

FROM V$ARCHIVE_DEST_STATUS

WHERE STATUS <> 'DEFERRED' AND STATUS <> 'INACTIVE';

4. Use the following query on Primary Database to find out the archive redolog files not received at each destination

SQL> SELECT LOCAL.THREAD#, LOCAL.SEQUENCE# FROM

(SELECT THREAD#, SEQUENCE# FROM V$ARCHIVED_LOG WHERE DEST_ID=1)

LOCAL WHERE LOCAL.SEQUENCE# NOT IN

(SELECT SEQUENCE# FROM V$ARCHIVED_LOG WHERE DEST_ID=2 AND

THREAD# = LOCAL.THREAD#);

5. Set log_archive_trace parameter in Primary Database & Standby Database to see the transmission of redo data

Monitoring the Performance of Redo Transport Services

View: v$system_event

Parameter: log_archive_dest_n

Attributes: ARCH

LGWR ( SYNC/ASYNC)

Waits Events to monitor:

- ARCn Wait Events

- LGWR SYNC wait events

- LGWR ASYNC Wait Events

Note: Use OEM to Monitor in GUI for Oracle Data Guard